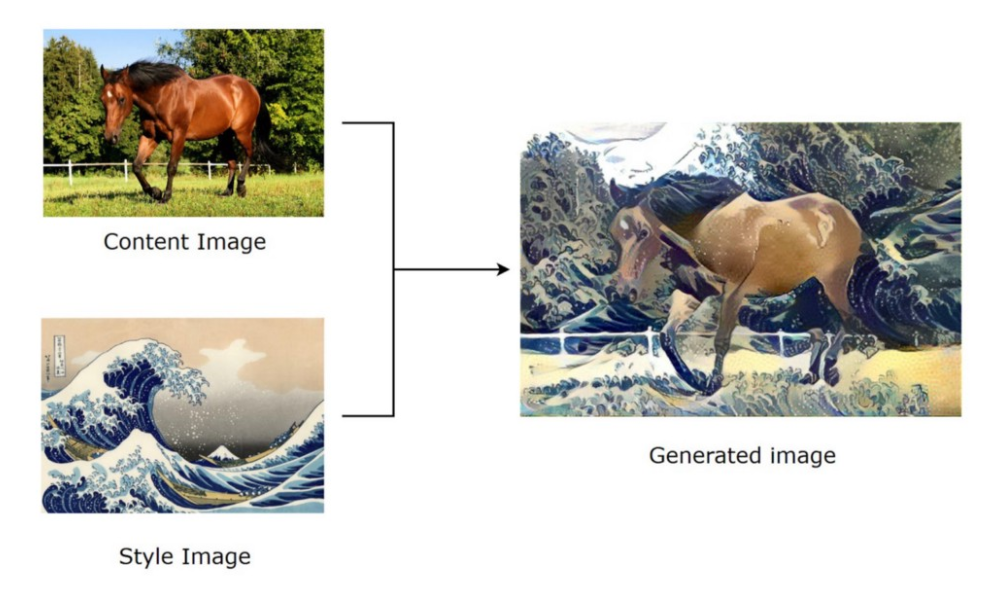

Neural style transfer combines a content image and a style image into a generated image that keeps the content of the first and the style of the second. It lets a machine act as an artist, and there is no single correct result: the output is judged by human aesthetics. In this introduction, you will learn the theory and then use a pre-trained model to stylize any content image.

Theory

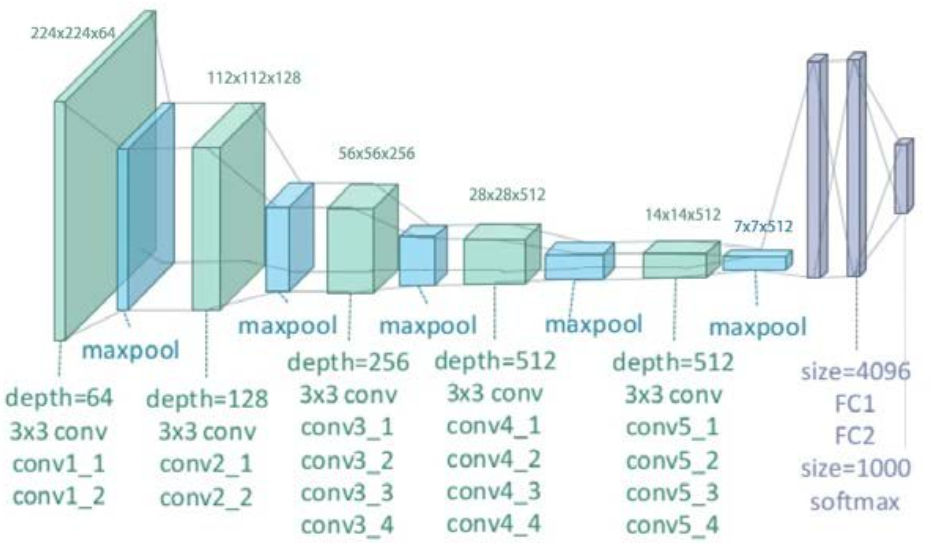

We use VGG19, a classic CNN whose feature extractor consists of five convolution-pooling blocks, to extract the features needed for this goal.
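As a quick check on the architecture, the sketch below lists the five VGG19 blocks and the shape of each block's convolution output for a 224×224 input. It assumes the standard VGG19 layout (2, 2, 4, 4 and 4 convolution layers with 3×3 kernels and 'same' padding, each block followed by a 2×2 max-pool with stride 2); the names `VGG19_BLOCKS` and `block_output_shapes` are illustrative, not part of any library.

```python
# Standard VGG19 feature extractor: (number of 3x3 conv layers, output channels) per block.
VGG19_BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]


def block_output_shapes(input_size=224):
    """Return (height, width, channels) of each block's last conv output, before its pooling."""
    shapes = []
    size = input_size
    for _n_convs, channels in VGG19_BLOCKS:
        # 3x3 convolutions with 'same' padding keep the spatial size unchanged.
        shapes.append((size, size, channels))
        # The 2x2 max-pool with stride 2 halves the spatial size for the next block.
        size //= 2
    return shapes


if __name__ == "__main__":
    for i, (h, w, c) in enumerate(block_output_shapes(), start=1):
        print(f"block{i}: {h}x{w}x{c}")
```

The channel counts and spatial sizes match the $N_l$ and $M_l$ values listed for the style loss. In Keras' `tf.keras.applications.VGG19`, these blocks correspond to the layers named `block1_conv1` through `block5_conv4`.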

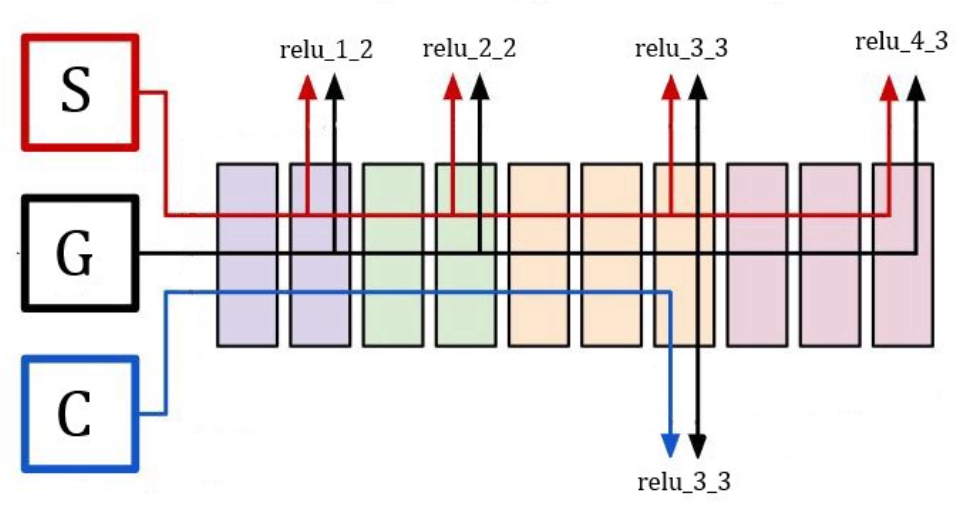

The main difficulty of style transfer is balancing how similar the generated image $G$ is to the content image $C$ against how similar it is to the style image $S$, so the loss function is a weighted sum of a content loss and a style loss.
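One common way to write this objective, in the formulation used since Gatys et al., is shown below. The weights $\alpha$ and $\beta$ are notation introduced here for illustration; their ratio controls how strongly the result follows the style rather than the content.

```latex
\mathcal{L}_{\text{total}}(G) = \alpha \, \mathcal{L}_{\text{content}}(C, G) + \beta \, \mathcal{L}_{\text{style}}(S, G)
```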

To calculate the loss, we need the output $C_3$ of the content image at the third convolution-pooling layer of the CNN, and the outputs $S_1, S_2, S_3, S_4, S_5$ of the style image at all five convolution-pooling layers.

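The content loss compares the features of the generated image with $C_3$ at the third layer, and the style loss compares the Gram matrices of the generated image's features with those of $S_1, \dots, S_5$ at all five layers. Here $N_l$ denotes the number of channels of layer $l$, i.e. $N_l \in \{64, 128, 256, 512, 512\}$, and $M_l$ denotes the spatial size (height × width) of layer $l$, i.e. $M_l \in \{224 \times 224, 112 \times 112, 56 \times 56, 28 \times 28, 14 \times 14\}$. “Gram” denotes the Gram matrix, whose entry $(i, j)$ is the inner product of the flattened feature maps of channels $i$ and $j$.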
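A common form of these losses, consistent with the definitions of $N_l$ and $M_l$ and following Gatys et al., is sketched below. It writes $G_l$ for the features of the generated image at layer $l$, reshapes every feature map into an $N_l \times M_l$ matrix (one row per channel), and uses per-layer weights $w_l$ (equal weights $w_l = 1/5$ are a common choice); $G_l$ and $w_l$ are notation assumed here for illustration.

```latex
\begin{aligned}
\mathcal{L}_{\text{content}}(C, G) &= \frac{1}{2} \sum_{i,j} \big( (G_3)_{ij} - (C_3)_{ij} \big)^2 \\
\operatorname{Gram}(X)_{ij} &= \sum_{k=1}^{M_l} X_{ik} X_{jk} \quad \big(\text{i.e. } \operatorname{Gram}(X) = X X^{\top} \in \mathbb{R}^{N_l \times N_l}\big) \\
\mathcal{L}_{\text{style}}(S, G) &= \sum_{l=1}^{5} \frac{w_l}{4 N_l^2 M_l^2} \sum_{i,j} \big( \operatorname{Gram}(G_l)_{ij} - \operatorname{Gram}(S_l)_{ij} \big)^2
\end{aligned}
```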

The similarity between the content features $C_3$ and the generated image $G$ is measured by the element-wise (position-by-position) difference of their feature maps, because content features are finer-grained and more localized than style features. In contrast, the similarity between the style features $S_l$ and the generated image $G$ is measured through Gram matrices: the inner products between channels sum over all spatial positions, so they discard where a pattern occurs and keep style characteristics such as textures and lines.
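The three losses can be sketched in NumPy as follows. This assumes the feature maps have already been extracted (for example from VGG19) as `(height, width, channels)` arrays, uses the Gatys-style normalization $1/(4 N_l^2 M_l^2)$ with equal layer weights by default, and treats the third entry of the feature list as the content layer. All function names and the `alpha`/`beta` defaults are illustrative; an actual optimizer would express the same computation in TensorFlow so that gradients can flow back to the generated image.

```python
import numpy as np


def gram(features):
    """Gram matrix of an (H, W, N) feature map: the (N, N) matrix of channel inner products."""
    h, w, n = features.shape
    x = features.reshape(h * w, n).T  # shape (N_l, M_l): one row per channel
    return x @ x.T


def content_loss(gen_feat, content_feat):
    """Half the sum of squared element-wise differences between two feature maps."""
    return 0.5 * np.sum((gen_feat - content_feat) ** 2)


def style_loss(gen_feats, style_feats, weights=None):
    """Weighted sum over layers of normalized squared Gram-matrix differences."""
    if weights is None:
        weights = [1.0 / len(gen_feats)] * len(gen_feats)  # equal weight per layer
    total = 0.0
    for w_l, g, s in zip(weights, gen_feats, style_feats):
        h, w, n = g.shape
        m = h * w  # M_l: number of spatial positions
        diff = gram(g) - gram(s)
        total += w_l * np.sum(diff ** 2) / (4.0 * n**2 * m**2)
    return total


def total_loss(gen_feats, content_feat, style_feats, alpha=1.0, beta=1.0):
    """alpha * content loss at the third layer + beta * style loss over all layers."""
    return alpha * content_loss(gen_feats[2], content_feat) + beta * style_loss(gen_feats, style_feats)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Small stand-in shapes for five layers (real VGG19 layers are far larger).
    shapes = [(16, 16, 4), (8, 8, 8), (4, 4, 16), (2, 2, 32), (1, 1, 32)]
    gen = [rng.random(s) for s in shapes]
    sty = [rng.random(s) for s in shapes]
    print(total_loss(gen, gen[2] + 0.1, sty, alpha=1.0, beta=1e3))
```

Because `gram` sums over all spatial positions, shifting a texture around the image leaves the style term unchanged, while the content term penalizes any change at each position.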

Implementations

This tutorial is based on the TensorFlow tutorial “Neural style transfer”. The system requirements are:

- Python=3.6

- Tensorflow=2.2.0

- Tensorflow_hub=0.11.0

First, import the packages and define some helper functions.

```python
import os
```

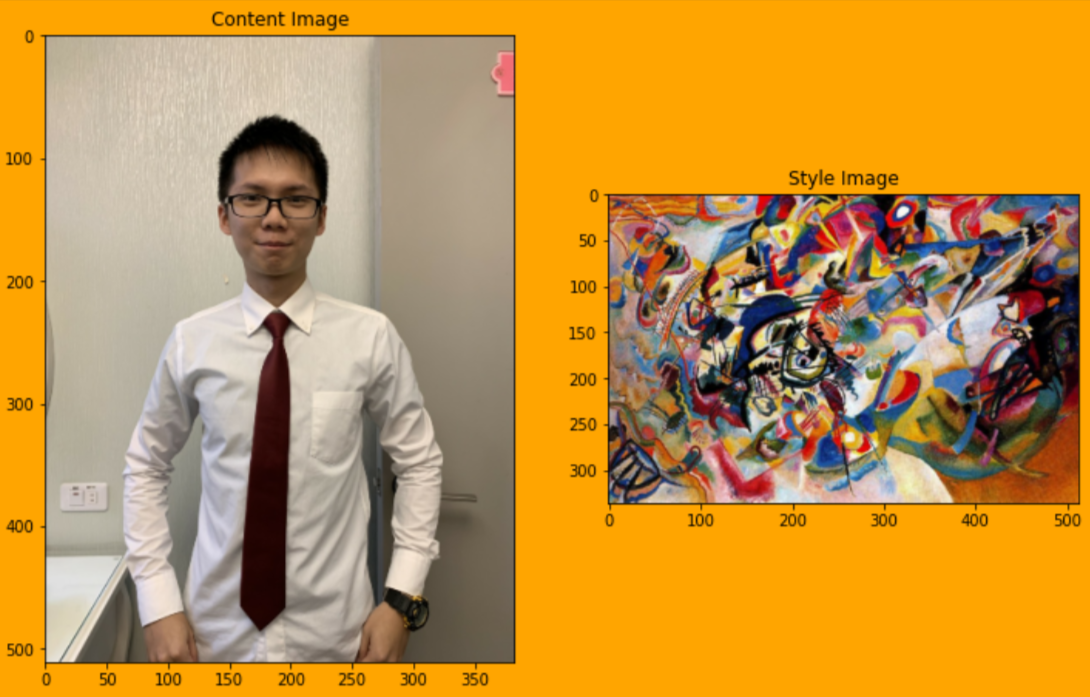

Next, prepare your own content image or download the example one, and download the example style image.

```python
# download example content image
```

Then, plot the content image and the style image.

```python
content_image = load_img(content_path)
```
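Assuming `load_img` follows the TensorFlow tutorial and rescales each image so that its longer side is 512 pixels while keeping the aspect ratio, its target size can be computed as in this sketch. The function name `scaled_shape` and the `max_dim=512` default are assumptions for illustration.

```python
def scaled_shape(height, width, max_dim=512):
    """Return (new_height, new_width) so the longer side equals max_dim, keeping the aspect ratio."""
    long_side = max(height, width)
    # Integer floor division truncates toward zero for positive sizes, like casting to int.
    return (height * max_dim // long_side, width * max_dim // long_side)


if __name__ == "__main__":
    print(scaled_shape(577, 700))  # → (422, 512)
    print(scaled_shape(1024, 768))  # → (512, 384)
```

Note that with this rule, images smaller than `max_dim` are scaled up as well as large ones being scaled down.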

Finally, load the model and predict.

```python
hub_model = hub.load('https://tfhub.dev/google/magenta/arbitrary-image-stylization-v1-256/2')
```
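According to the model's TF Hub page, the loaded model is called with the content and style images, e.g. `hub_model(tf.constant(content_image), tf.constant(style_image))[0]` (assuming `style_image` was loaded the same way as `content_image`), and the first output is the stylized image as a float tensor with a leading batch dimension and values in [0, 1]. To display or save it with a regular image library, it must be converted to 8-bit pixels. A minimal NumPy sketch of that conversion, assuming an output of shape `(1, height, width, 3)`; the helper name `to_uint8_image` is hypothetical:

```python
import numpy as np


def to_uint8_image(batch):
    """Convert a (1, H, W, 3) float array in [0, 1] to an (H, W, 3) uint8 array."""
    image = np.asarray(batch, dtype=np.float32)
    if image.ndim == 4:
        # Drop the batch dimension; exactly one image is expected.
        assert image.shape[0] == 1, "expected a batch of exactly one image"
        image = image[0]
    # Scale to [0, 255], round to the nearest integer, and clip out-of-range values.
    return np.clip(np.rint(image * 255.0), 0, 255).astype(np.uint8)
```

With Pillow installed, `PIL.Image.fromarray(to_uint8_image(stylized_image))` then gives an RGB image that can be shown or saved; clipping guards against small numerical overshoots outside [0, 1].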